The problem

Optical tracking systems are able to gather information about trackers at an impressive speed (those we have in the labs go up to 360 fps).

The information provided by those sensors that we care about most is:

- Marker positions

- Marker rotations

- Joint lengths

This animation was a pretty smooth one, without any big defects. Let's look at a harder case:

Notice the flickering! This occurs when fewer than 3 cameras can see the markers, causing a complete loss of information. This is what we want to fix.

Addressing the problem

A possible solution is to apply some sort of external tracking, so that when the sensors' information is lost we can try to predict it.

A simple way to do this is to apply naive filters! We propose two: a Kalman filter (the most popular and obvious choice) and a particle filter.

Starting with the Kalman filter:

Some shaking is noticeable. However, the motion is unpredictable and no processing is applied beyond the filter itself, so the result is quite nice.
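Here is a minimal sketch of the idea. It assumes a constant-velocity motion model per marker and measurements at 360 fps. The class name and the noise parameters (`accel_std`, `meas_std`) are illustrative choices, not the values used in our setup. When a frame has no measurement (the marker is lost), the filter runs only the prediction step, and that is what fills the gaps:

```python
import numpy as np


class ConstantVelocityKalman:
    """Kalman filter for one 3D marker under a constant-velocity model.

    State: [x, y, z, vx, vy, vz]. Measurement: [x, y, z].
    Noise values are illustrative assumptions, not tuned values.
    """

    def __init__(self, dt: float, accel_std: float = 10.0, meas_std: float = 0.01):
        self.F = np.eye(6)
        self.F[:3, 3:] = dt * np.eye(3)                     # position += velocity * dt
        self.H = np.hstack([np.eye(3), np.zeros((3, 3))])   # only positions are observed
        g = np.array([[0.5 * dt**2], [dt]])                 # discrete white-noise acceleration
        self.Q = np.kron(g @ g.T, np.eye(3)) * accel_std**2
        self.R = np.eye(3) * meas_std**2
        self.x = None                                       # set on the first measurement
        self.P = np.diag([meas_std**2] * 3 + [10.0**2] * 3) # velocity is unknown at start

    def step(self, z=None):
        """Advance one frame; z is the measured position, or None if the marker is lost."""
        if self.x is None:
            if z is None:
                return None                                 # nothing to track yet
            self.x = np.concatenate([np.asarray(z, dtype=float), np.zeros(3)])
            return self.x[:3].copy()
        # Predict: always runs, so lost frames are filled by extrapolation.
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        if z is not None:
            # Update: correct the prediction with the new measurement.
            y = np.asarray(z, dtype=float) - self.H @ self.x
            S = self.H @ self.P @ self.H.T + self.R
            K = self.P @ self.H.T @ np.linalg.inv(S)
            self.x = self.x + K @ y
            self.P = (np.eye(6) - K @ self.H) @ self.P
        return self.x[:3].copy()


# Usage: a marker moving at 1 unit/s along X, lost for the last 10 frames.
dt = 1 / 360
kf = ConstantVelocityKalman(dt)
for k in range(370):
    z = np.array([k * dt, 0.5, 1.2]) if k < 360 else None
    est = kf.step(z)
```

Each marker gets its own filter. Raising `accel_std` roughly makes the filter follow measurements more closely, at the cost of passing more jitter through.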

Switching to the particle filter version:

Because of its stochastic nature, the particle filter is not really suited to tracking rigid bodies. In particular, it cannot retain any information about the body's structure, since there is no correlation between the particles tracking one marker and those tracking another.

Other, more complex objective functions regulating the particles' behaviour might of course be better suited to the job.
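To make the "no correlation between markers" point concrete, here is a minimal bootstrap particle filter for a single 3D marker. It assumes a random-walk motion model and a Gaussian likelihood. The class name, the particle count and the noise values are hypothetical illustrations, not our exact implementation:

```python
import numpy as np


class MarkerParticleFilter:
    """Bootstrap particle filter for ONE 3D marker (random-walk motion model).

    Parameters are illustrative assumptions. Nothing here links one marker's
    particles to another's, so the skeleton structure is not represented.
    """

    def __init__(self, n_particles=500, motion_std=0.01, meas_std=0.01, seed=0):
        self.n = n_particles
        self.motion_std = motion_std
        self.meas_std = meas_std
        self.rng = np.random.default_rng(seed)
        self.particles = None  # (n, 3) array, created on the first measurement

    def step(self, z=None):
        """Advance one frame; z is the measured position, or None if the marker is lost."""
        if self.particles is None:
            if z is None:
                return None
            z = np.asarray(z, dtype=float)
            self.particles = z + self.rng.normal(0.0, self.meas_std, (self.n, 3))
            return self.particles.mean(axis=0)
        # Predict: every particle diffuses independently (stochastic step).
        self.particles = self.particles + self.rng.normal(0.0, self.motion_std, (self.n, 3))
        if z is None:
            return self.particles.mean(axis=0)  # no data: the cloud just spreads out
        # Weight: Gaussian likelihood of the measurement for each particle.
        d2 = np.sum((self.particles - np.asarray(z, dtype=float)) ** 2, axis=1)
        logw = -0.5 * d2 / self.meas_std**2
        w = np.exp(logw - logw.max())          # subtract max for numerical stability
        w /= w.sum()
        estimate = w @ self.particles          # weighted mean position
        # Resample (multinomial) so particles concentrate where weights are high.
        idx = self.rng.choice(self.n, size=self.n, p=w)
        self.particles = self.particles[idx]
        return estimate


# Usage: one independent filter per marker, e.g. a static marker.
pf = MarkerParticleFilter()
truth = np.array([0.3, 0.5, 1.0])
for _ in range(50):
    est = pf.step(truth)
```

The Gaussian likelihood in the weighting step is where a richer objective function would plug in, for example one that also penalises particles violating known joint lengths.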

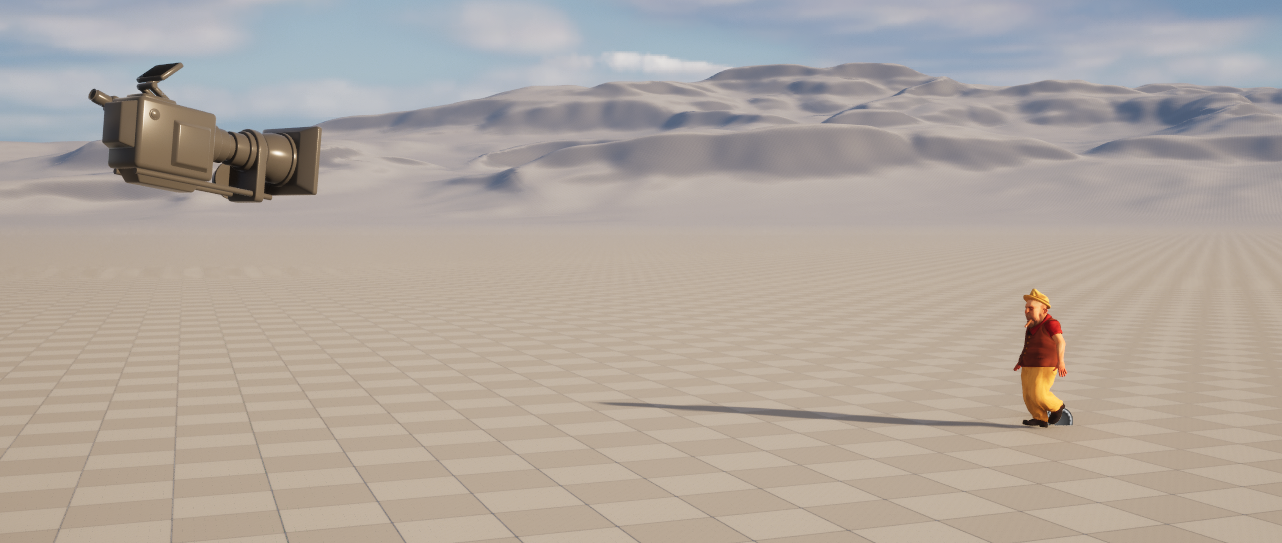

Unreal Engine simulation

By simulating a virtual environment inside Unreal Engine 5.4 (UE), our goal is to extract the pose information needed to project all the joints, together with the skeletal structure, from 3D onto the 2D camera image plane.

This was our first time playing around with UE, but after a while we got the hang of it. UE makes it possible to interact with level (scene) components either via C++ code or via blueprints (BP), which represent code functions visually as node graphs. Since we were new to the engine, we went for the BP approach.

First, we modelled the scene by inserting two core blueprints: one containing our main actor and the other containing the camera.

Using the animation retargeting feature provided by UE5, we were also able to easily map the provided animation onto another free skeleton from the Adobe Mixamo characters.

Once the actors are properly positioned in the scene, a LevelSequencer component lets us capture a video using the virtual camera. Using the blueprint engine together with the Json blueprint utility plugin, we implemented a script that extracts the following data in JSON format:

- bones (∀ frames, ∀ bones ∈ skeleton): {X, Y, Z} position, {X, Y, Z, W} rotation (quaternion), bone name.

- skeleton base frame (∀ frames): {X, Y, Z} position, {X, Y, Z, W} rotation (quaternion).

- camera (once, since the camera is fixed): {X, Y, Z} position, {X, Y, Z, W} rotation (quaternion), FOV, aspect ratio.
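As an illustration of how this data can be consumed on the Python side, here is a small loader. The JSON layout and all key names (`frames`, `bones`, `base`, `camera`, `fov`, `aspect_ratio`) are hypothetical, and the actual blueprint export may be organised differently. The sketch only shows the shape of the data: per-frame bone positions and quaternions, a per-frame base frame, and a single camera record.

```python
import json

import numpy as np

# Hypothetical export layout; key names are illustrative, not the real export.
SAMPLE = """
{
  "camera": {"position": [-300.0, 0.0, 100.0], "rotation": [0.0, 0.0, 0.0, 1.0],
             "fov": 90.0, "aspect_ratio": 1.7778},
  "frames": [
    {"base": {"position": [0.0, 0.0, 0.0], "rotation": [0.0, 0.0, 0.0, 1.0]},
     "bones": [{"name": "Hips", "position": [0.0, 0.0, 95.0], "rotation": [0.0, 0.0, 0.0, 1.0]},
               {"name": "Head", "position": [0.0, 0.0, 165.0], "rotation": [0.0, 0.0, 0.0, 1.0]}]}
  ]
}
"""


def load_capture(text):
    """Return bone names, (F, B, 3) positions, (F, B, 4) quaternions (X, Y, Z, W),
    (F, 3) base-frame positions and the camera record."""
    data = json.loads(text)
    frames = data["frames"]
    names = [b["name"] for b in frames[0]["bones"]]  # assumes a fixed bone order
    positions = np.array([[b["position"] for b in f["bones"]] for f in frames], dtype=float)
    rotations = np.array([[b["rotation"] for b in f["bones"]] for f in frames], dtype=float)
    base_positions = np.array([f["base"]["position"] for f in frames], dtype=float)
    return names, positions, rotations, base_positions, data["camera"]


names, pos, rot, base, cam = load_capture(SAMPLE)
```

Stacking everything into `(frames, bones, 3)` arrays keeps the later projection step vectorised.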


3D to 2D projection

We chose OpenCV as our image processing framework, since it provides everything we need to project a set of 3D points onto the camera image plane.

There were a few things to deal with first.

UE5 and OpenCV use two different coordinate systems:

- UE5 is left-handed, with {+X = forward, +Y = right, +Z = up}

- OpenCV is right-handed, with {+X = right, +Y = down, +Z = forward (depth)}
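For points already expressed relative to the camera, in the camera's own UE axes, the conversion between the two conventions is a fixed axis permutation with one sign flip. Here is a minimal NumPy sketch. The names `UE_TO_CV` and `ue_to_cv` are ours and come from neither library:

```python
import numpy as np

# Each row gives one OpenCV axis in terms of UE axes:
#   x_cv (right)   =  y_ue (right)
#   y_cv (down)    = -z_ue (up)
#   z_cv (forward) =  x_ue (forward)
UE_TO_CV = np.array([
    [0.0, 1.0, 0.0],
    [0.0, 0.0, -1.0],
    [1.0, 0.0, 0.0],
])


def ue_to_cv(points_ue):
    """Map (N, 3) camera-relative points from UE camera axes to OpenCV camera axes."""
    return np.asarray(points_ue, dtype=float) @ UE_TO_CV.T


print(ue_to_cv([[100.0, 50.0, 20.0]]))  # 100 forward, 50 right, 20 up
```

The matrix has determinant -1. It is therefore a reflection, reflecting the handedness change, and not a rotation.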

Knowing the world coordinates of both the camera and the joints, we just need to extract the camera intrinsics and compute the projection. Camera intrinsics are generally estimated via camera calibration. Since this is a controlled environment with no lens distortion, we can instead compute them directly with some algebra. By computing the transformation of each point with cv.projectPoints(), we are able to correctly overlay the skeleton on the image plane.

Bonus

Instead of evaluating results in Matplotlib, it would be much better to forward the data to a more suitable environment such as Blender. To do so, we used the deep-motion-editing repository as a basis. It provides a framework for building skeleton-aware neural networks and interacts with Blender's Python APIs.